While voice recognition software has certainly improved over the past two decades, it hasn’t exactly been the blockbuster tech that Ray Kurzweil predicted. My first experiments with the technology involved playing around with Microsoft’s Speech API (circa Windows 95) and early versions of Dragon NaturallySpeaking. Both were interesting as “toys” but didn’t work well enough for me to put them to practical use.

Since then, I’ve tried out new voice recognition software every few years and have always come away thinking, “Well, it’s better. But it’s still not very good.” For fans of the technology, it’s been a slow journey of disappointment. The engineering problems in building practical voice systems turned out to be much harder than anyone thought they’d be.

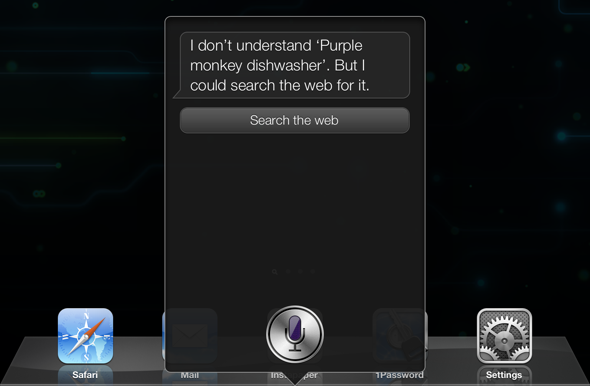

Human languages don’t follow the strict rules and grammar of programming languages, and computer scientists have struggled to build software that can match the intention behind someone’s speech to a query or action that software can accurately process. Writing code that understands “What is the best way to make bacon?” (Answer: in the oven, just saying.) in all the countless ways a human might ask the question has proven challenging.

Collect ALL the data

One of the tactics software engineers use to figure out how to handle varied types of input is to build a database of potential input (in this case, human speech) and look for common threads and patterns. It’s a bit of a brute-force approach, but it helps engineers understand what types of input they need to write code for, and where inputs are similar, it reduces the overall amount of code needed.

If you can code your software to know that questions like “What’s it going to be like outside tomorrow?” and “What’s the weather supposed to do?” are both questions about the weather forecast, processing human speech becomes a little easier. Obviously you wouldn’t want to (or even be able to) build code for every possible input, but this approach does give you a good base to build from.
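To make the idea concrete, here is a minimal Python sketch of mapping differently phrased questions onto a single intent. The intent names and keyword lists are hypothetical, and simple keyword overlap stands in for the far more sophisticated statistical models that real voice systems learn from large speech databases.

```python
import re
from typing import Optional

# Hypothetical intent table: each intent lists keywords that (in this sketch)
# were spotted as common threads across sample utterances. A real system
# would learn these patterns from a large corpus rather than hand-code them.
INTENT_KEYWORDS = {
    "weather_forecast": {"weather", "outside", "rain", "forecast", "temperature"},
    "recipe_lookup": {"make", "cook", "recipe", "bacon"},
}


def tokenize(utterance: str) -> list:
    # Lowercase and keep runs of letters/apostrophes as words.
    return re.findall(r"[a-z']+", utterance.lower())


def classify(utterance: str) -> Optional[str]:
    """Return the intent whose keywords overlap the utterance most, or None."""
    tokens = set(tokenize(utterance))
    best_intent, best_score = None, 0
    for intent, keywords in INTENT_KEYWORDS.items():
        score = len(tokens & keywords)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent


if __name__ == "__main__":
    print(classify("What's it going to be like outside tomorrow?"))  # → weather_forecast
    print(classify("What's the weather supposed to do?"))            # → weather_forecast
```

Both weather questions share no wording beyond a single keyword each, yet they land on the same intent, which is the “good base to build from” the approach provides. Anything the table doesn’t cover simply returns `None`, illustrating why you can’t hand-code every possible input.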

In the past, capturing the volume of data needed for a thorough analysis of speech wasn’t a realistic option for voice recognition researchers, largely because of cost. It was simply too expensive to capture, store, and analyze a large enough sample of voice data to push research forward.

Leveraging scale

Over the last few years, lower costs of storage and computing power, paired with a much larger population of internet users, have made this type of data collection a lot cheaper and easier. In the case of Google (and to some extent Apple), features like Voice Search probably weren’t initially intended to be products in and of themselves, but rather capture points for the company to collect and analyze voice data so that it could improve future products.

Analysis of a massive database of voice data, paired with what are likely some very smart algorithms, helped Google build its latest update to Voice Search. For the more scientifically minded, Google’s research site has a lot of interesting information on this analysis work. And as you can see from the video, the results are impressive.

https://www.youtube.com/watch?v=n2ZUSPecPRk

Welcome to the future

For people who have been following voice recognition, the recent uptick in progress is very exciting. Now that the field has gained some momentum, development will likely advance at a rapid pace. The “teaching” component of these systems should improve, enabling them to decipher natural language without human help, and more products will likely include voice interfaces. It’s been a long time coming, but it’s finally starting to feel like the future.
